📚 Full list of publications

Here is a link to all of our research publications.

💾 Datasets

As part of the project, we open-source some of the datasets used in our research.

🔎 Research highlights

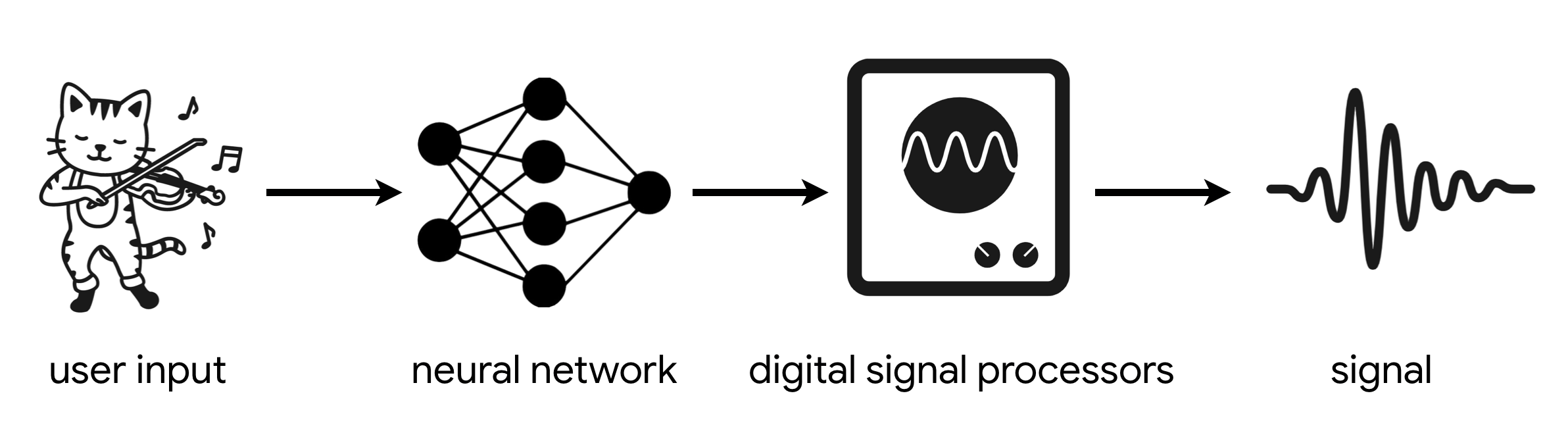

DDSP

A library that lets you combine the interpretable structure of classical DSP elements (such as filters, oscillators, and reverberation) with the expressivity of deep learning.
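As a rough illustration of what a "differentiable DSP element" means, here is a toy additive (harmonic) oscillator. This is a minimal sketch in plain Python, not the DDSP library's API, which is built on TensorFlow; `harmonic_synth` and `amplitude_gradient` are hypothetical names. The point is that the output is a simple, smooth function of interpretable controls (fundamental frequency, per-harmonic amplitudes), so gradients with respect to those controls exist and a neural network can be trained to predict them.

```python
import math


def harmonic_synth(f0_hz, amplitudes, sample_rate=16000, n_samples=160):
    """Toy additive synthesizer: a sum of sinusoids at integer multiples of f0.

    f0_hz is held constant for simplicity; amplitudes[k] scales harmonic k + 1.
    Harmonics at or above the Nyquist frequency are skipped to avoid aliasing.
    """
    nyquist = sample_rate / 2
    audio = []
    for n in range(n_samples):
        t = n / sample_rate
        sample = 0.0
        for k, amp in enumerate(amplitudes, start=1):
            freq = k * f0_hz
            if freq < nyquist:
                sample += amp * math.sin(2 * math.pi * freq * t)
        audio.append(sample)
    return audio


def amplitude_gradient(f0_hz, k, sample_rate=16000, n_samples=160):
    """d(audio[n]) / d(amplitudes[k - 1]) for every sample n.

    The output is linear in each amplitude, so the gradient is just that
    harmonic's sinusoid (or zero if the harmonic is above Nyquist).
    """
    if k * f0_hz >= sample_rate / 2:
        return [0.0] * n_samples
    return [math.sin(2 * math.pi * k * f0_hz * n / sample_rate) for n in range(n_samples)]
```

In a real differentiable pipeline the same computation would be written in an autodiff framework, so gradients like this one are derived automatically rather than by hand.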

Papers

Blog Posts

- DDSP: Differentiable Digital Signal Processing

- Tone Transfer

- Stepping Towards Transcultural Machine Learning in Music

Colab Notebooks

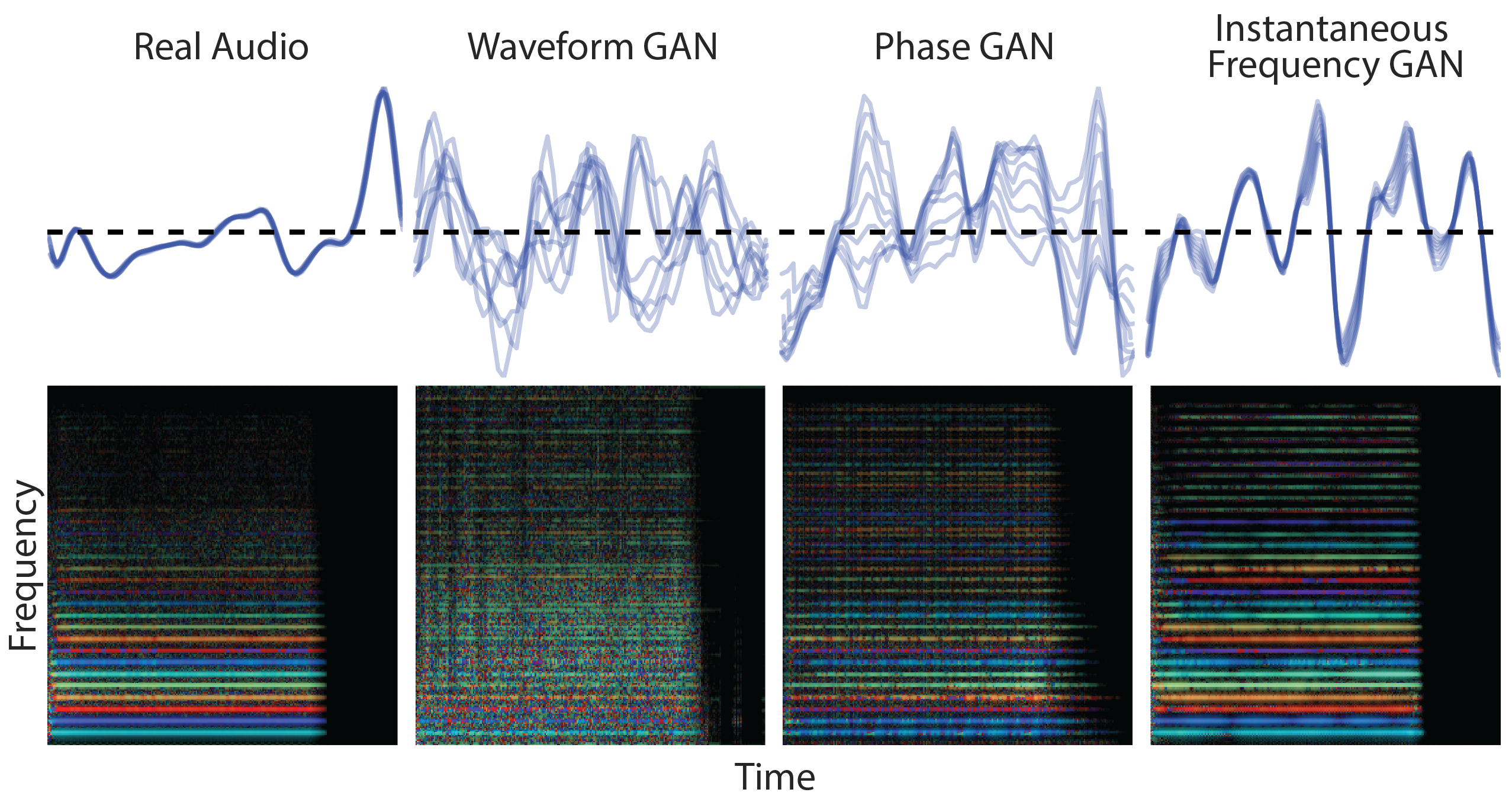

GANSynth

A method to synthesize high-fidelity audio with GANs.

Papers

Blog Posts

Colab Notebooks

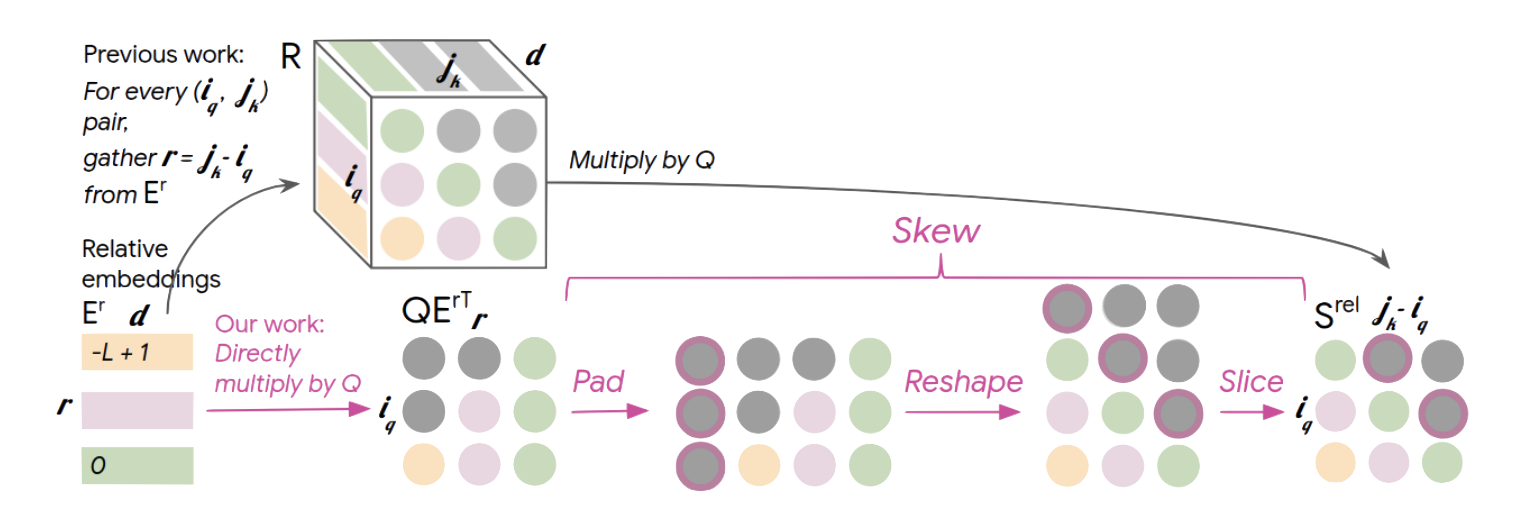

Music Transformer

A self-attention-based neural network that can generate music with long-term coherence.
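The core idea of relative self-attention can be sketched in a few lines: in addition to the usual query-key score, each attention logit gets a term that depends on how far apart the two positions are, which helps a model pick up repetition and other long-range musical structure. The sketch below is a plain-Python, single-head, causal version under simplifying assumptions: `rel_emb[r]` is a hypothetical embedding for relative distance `r = i - j`, and the memory-efficient "skewing" procedure from the Music Transformer paper is not reproduced.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_relative_attention(q, k, v, rel_emb):
    """Single-head causal attention with a relative-position term.

    q, k, v: lists of L vectors (q and k share dimension d).
    rel_emb[r]: vector of dimension d for relative distance r = i - j (r >= 0).
    Position i attends only to positions j <= i.
    """
    d = len(q[0])
    out = []
    for i in range(len(q)):
        # Content term q_i . k_j plus relative term q_i . r_{i-j}, scaled by sqrt(d).
        logits = [(dot(q[i], k[j]) + dot(q[i], rel_emb[i - j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(logits)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights))
                    for c in range(len(v[0]))])
    return out
```

With all-zero queries every logit is zero, so each position simply averages the values it can see; larger entries in `rel_emb` shift attention toward specific distances.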

Papers

Blog Posts

- Music Transformer: Generating Music with Long-Term Structure

- Generating Piano Music with Transformer

- Encoding Musical Style with Transformer Autoencoders

- Listen to Transformer

- Making an Album with Music Transformer

Colab Notebooks

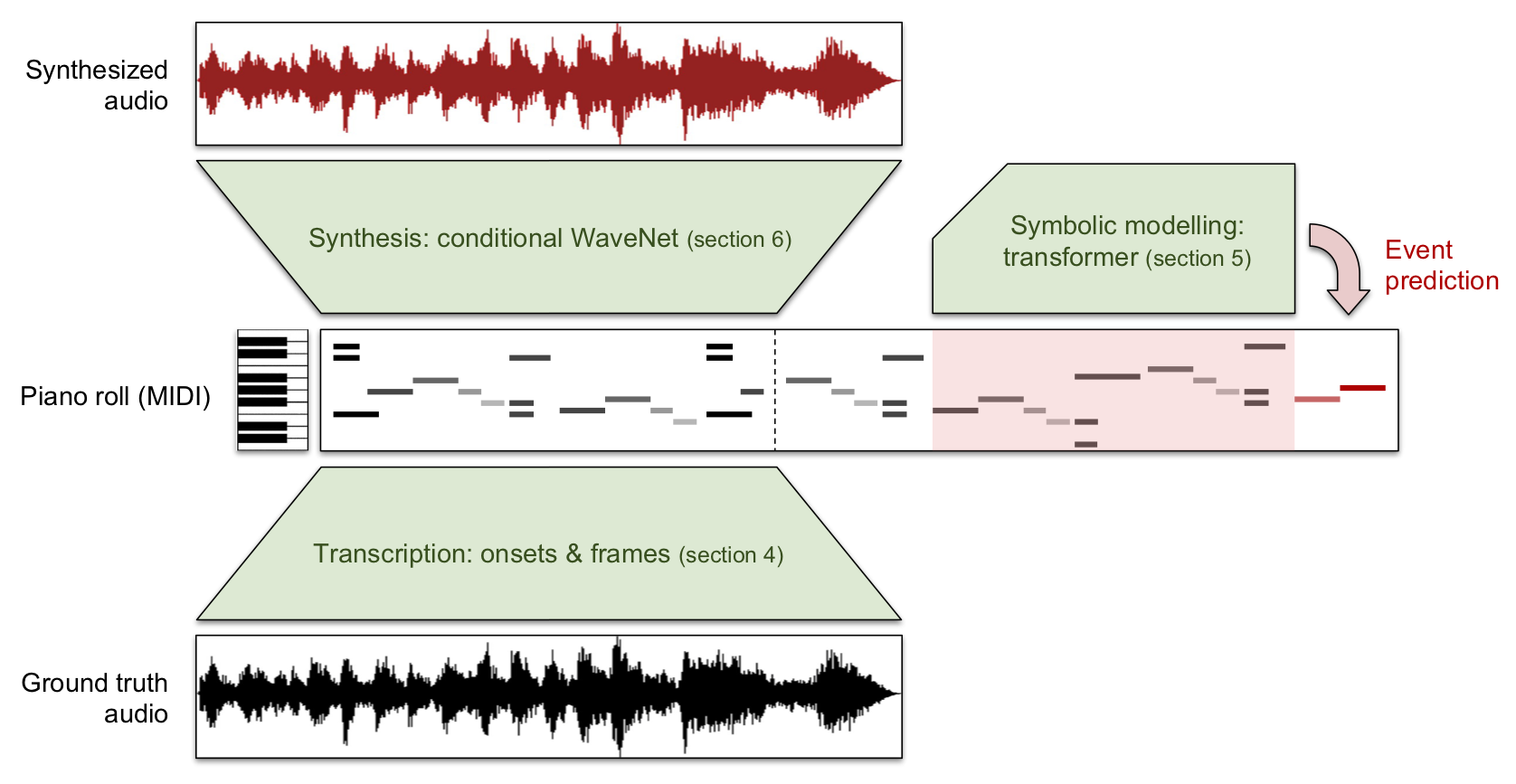

Wave2Midi2Wave

A process that can transcribe, compose, and synthesize audio waveforms with coherent musical structure on timescales spanning six orders of magnitude (~0.1 ms to ~100 s).
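The "six orders of magnitude" figure follows from the ratio of the two endpoints of that range:

```latex
\frac{\sim 100\,\mathrm{s}}{\sim 0.1\,\mathrm{ms}}
  = \frac{10^{2}\,\mathrm{s}}{10^{-4}\,\mathrm{s}}
  = 10^{6}
```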

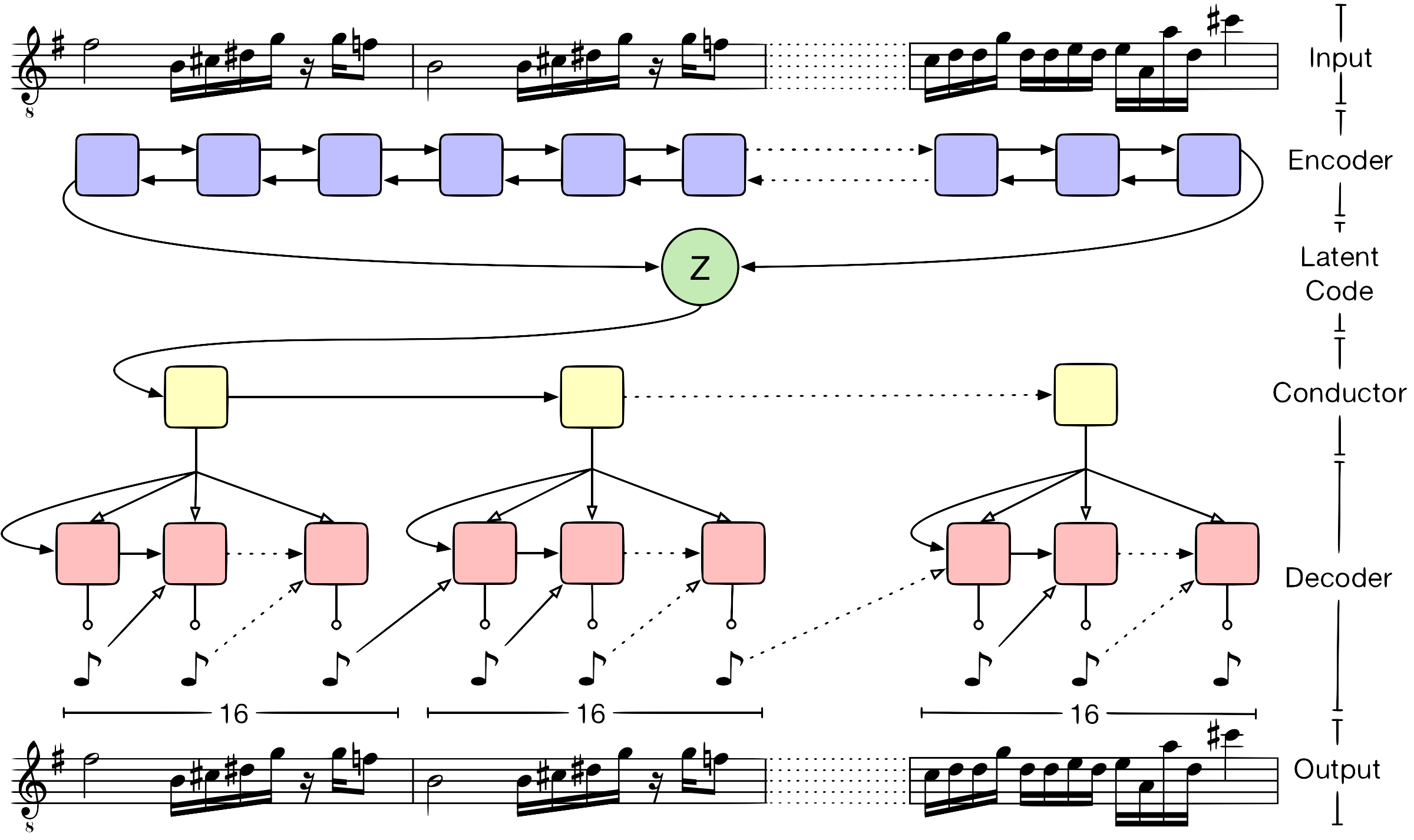

MusicVAE

A hierarchical latent vector model for learning long-term structure in music.
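The "hierarchical" part refers to how a sequence is decoded from a single latent vector `z`: a top-level "conductor" produces one embedding per subsequence (for example, per bar), and a lower-level decoder generates each bar from its own embedding. The sketch below shows only that control flow. `conductor_step` and `bar_decoder` are hypothetical stand-ins for the trained networks (in the real model both are recurrent, and the bar decoder is autoregressive within a bar), so this is an outline of the idea, not MusicVAE's implementation.

```python
def hierarchical_decode(z, num_bars, conductor_step, bar_decoder, init_state=None):
    """Decode num_bars bars from a latent z using a two-level decoder.

    conductor_step(state, z) -> (new_state, bar_embedding)   (hypothetical)
    bar_decoder(bar_embedding) -> list of events for one bar  (hypothetical)
    """
    state = init_state
    events = []
    for _ in range(num_bars):
        # Top level: the conductor advances once per bar, conditioned on z.
        state, bar_embedding = conductor_step(state, z)
        # Bottom level: each bar is generated from its own embedding, so
        # structure across bars has to be carried by z and the conductor.
        events.extend(bar_decoder(bar_embedding))
    return events
```

For example, with a toy conductor that emits `z + bar_index` and a decoder that repeats its embedding twice, `hierarchical_decode(10, 3, ...)` produces six events, two per bar.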

Papers

- A Hierarchical Latent Vector Model for Learning Long-Term Structure in Music

- Learning a Latent Space of Multitrack Measures

- MidiMe: Personalizing a MusicVAE model with user data

Blog Posts

- MusicVAE: Creating a palette for musical scores with machine learning

- Multitrack MusicVAE: Interactively Exploring Musical Styles

- YACHT's new album is powered by ML + Artists

Colab Notebooks

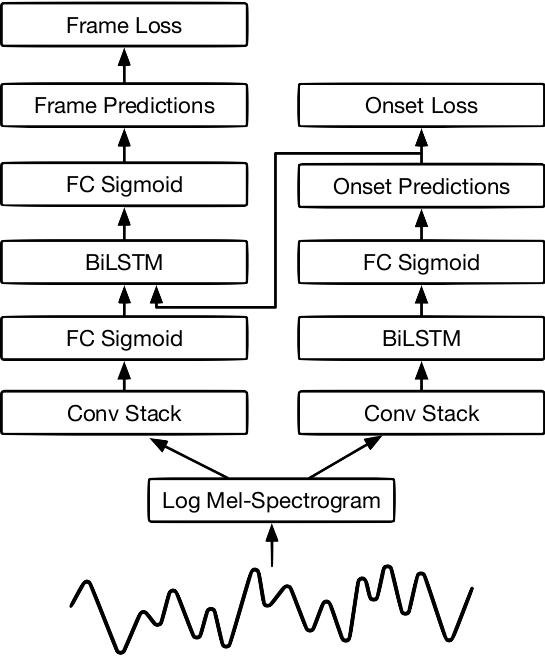

Onsets and Frames

We advance the state of the art in polyphonic piano music transcription using a deep convolutional and recurrent neural network trained to jointly predict onsets and frames.
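One way the two predictions can be combined at inference time is to let a note start only where the onset detector fires, and then let it continue for as long as the frame detector stays active. The sketch below applies that rule to a single pitch. It is a simplified illustration under stated assumptions: `decode_pitch` is a hypothetical name, the 0.5 threshold is arbitrary, both probability lists are assumed to have the same length, and velocity and re-articulated notes are ignored.

```python
def decode_pitch(onset_probs, frame_probs, threshold=0.5):
    """Turn per-frame onset/frame probabilities for one pitch into notes.

    Returns (start_frame, end_frame) pairs with end_frame exclusive.
    Frame activity that is not preceded by a detected onset is ignored.
    """
    notes = []
    start = None
    for t, (onset, frame) in enumerate(zip(onset_probs, frame_probs)):
        onset_on = onset >= threshold
        frame_on = frame >= threshold
        if start is None:
            if onset_on:
                start = t  # a note may only begin where an onset is detected
        elif not (frame_on or onset_on):
            notes.append((start, t))  # the note ends when frame activity stops
            start = None
    if start is not None:
        notes.append((start, len(frame_probs)))  # note still sounding at the end
    return notes
```

With `onset_probs = [0, 0.9, 0, 0, 0, 0]` and `frame_probs = [0.8, 0.9, 0.9, 0.1, 0.9, 0.9]`, only the note starting at frame 1 survives; the frame activity at frames 0, 4, and 5 has no supporting onset.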

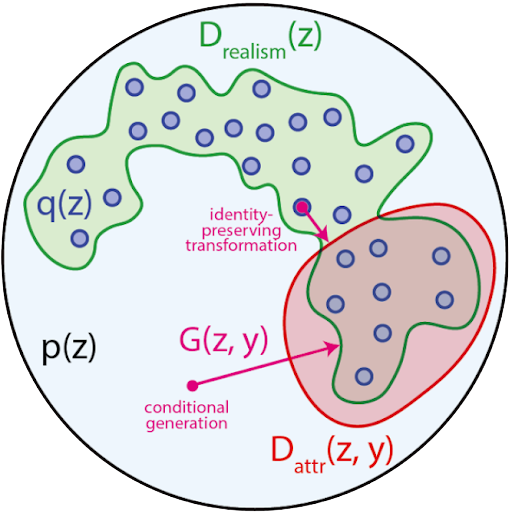

Latent Constraints

A method to condition generation without retraining the model, by learning latent constraints post hoc: value functions that identify regions in latent space that generate outputs with desired attributes. We can then sample conditionally from these regions using gradient-based optimization or amortized actor functions.
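The gradient-based option can be sketched as gradient ascent in latent space: start from a latent `z0`, move it toward higher values of a value function `V(z)`, and penalize drifting too far from where it started. In this minimal sketch the squared-distance penalty, step size, and step count are assumptions chosen for illustration, and `optimize_latent` is a hypothetical name; `value_grad` stands in for the gradient of a learned value function.

```python
def optimize_latent(z0, value_grad, distance_weight=0.5, lr=0.1, steps=200):
    """Gradient ascent on J(z) = V(z) - distance_weight * ||z - z0||^2.

    value_grad(z) must return the gradient of V at z as a list of floats.
    """
    z = list(z0)
    for _ in range(steps):
        grad_v = value_grad(z)
        # dJ/dz = dV/dz - 2 * distance_weight * (z - z0)
        z = [zi + lr * (gi - 2.0 * distance_weight * (zi - z0i))
             for zi, gi, z0i in zip(z, grad_v, z0)]
    return z
```

With a toy value function `V(z) = -||z - c||^2` (gradient `-2 (z - c)`), the optimum is `(c + distance_weight * z0) / (1 + distance_weight)`; for `c = [1, 1]`, `z0 = [0, 0]`, and the default weight of 0.5, the result approaches `[2/3, 2/3]`. A larger `distance_weight` keeps samples closer to the original latent at the cost of weaker attribute control.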

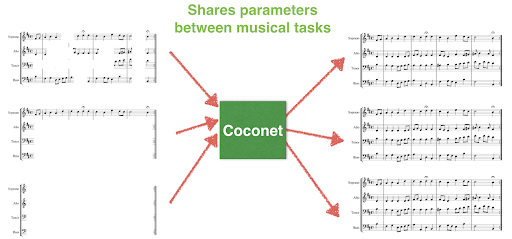

COCONET

An instance of orderless NADE, Coconet uses deep convolutional neural networks to perform music inpainting through Gibbs sampling.
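Inpainting with blocked Gibbs sampling can be outlined as follows: fill the region to be generated with random notes, then repeatedly pick a random subset of that region, ask the model for new distributions over those cells given everything else, and resample them. The subset is large at first and shrinks over time. This is a minimal sketch, not Coconet's code: `model` is a hypothetical callable standing in for the trained network, the grid holds one pitch index per voice per time step, and the linear annealing schedule and its parameter values are illustrative assumptions.

```python
import random


def gibbs_inpaint(grid, inpaint_mask, model, num_pitches, n_iters=20,
                  alpha_max=1.0, alpha_min=0.05, eta=0.75, seed=0):
    """Resample the masked cells of a voices x time grid of pitch indices.

    inpaint_mask[v][t] is True where the cell should be generated.
    model(grid, resample_mask) -> probs[v][t], a list of num_pitches weights
    for each cell (hypothetical; it should ignore the cells being resampled).
    """
    rng = random.Random(seed)
    grid = [row[:] for row in grid]  # do not modify the caller's grid
    # Initialize the region to inpaint with random pitches.
    for v, row in enumerate(inpaint_mask):
        for t, masked in enumerate(row):
            if masked:
                grid[v][t] = rng.randrange(num_pitches)
    for n in range(n_iters):
        # Annealed block size: resample many cells early and few cells late.
        alpha = max(alpha_min, alpha_max - n * (alpha_max - alpha_min) / (eta * n_iters))
        resample = [[masked and rng.random() < alpha for masked in row]
                    for row in inpaint_mask]
        probs = model(grid, resample)
        for v, row in enumerate(resample):
            for t, chosen in enumerate(row):
                if chosen:
                    grid[v][t] = rng.choices(range(num_pitches), weights=probs[v][t])[0]
    return grid
```

Cells outside the mask are never touched, which is what lets the same model complete partial scores in any order.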

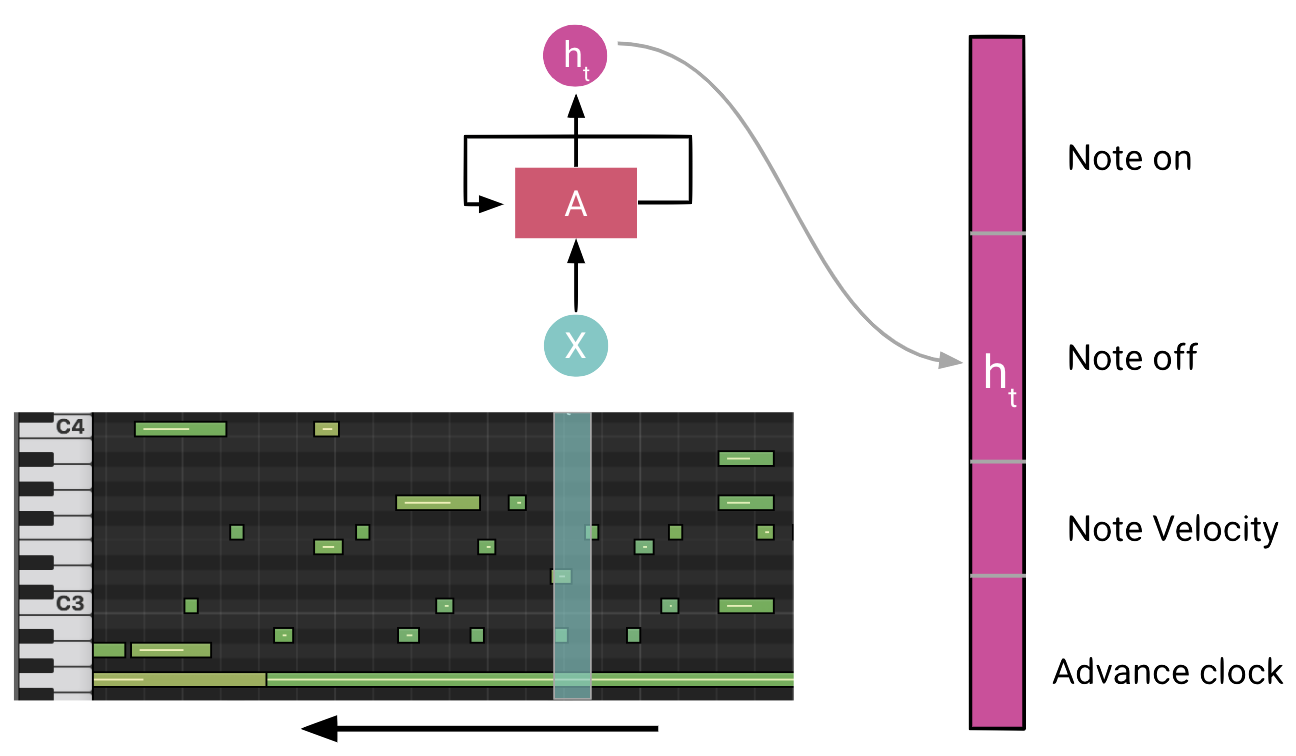

Performance RNN

An LSTM-based recurrent neural network designed to model polyphonic music with expressive timing and dynamics.
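Expressive timing and dynamics are easier to model when a performance is written as a stream of events rather than a fixed time grid. The sketch below assumes the event vocabulary described in the Performance RNN blog post (NOTE_ON and NOTE_OFF per pitch, TIME_SHIFT in 10 ms steps up to 1 s, and 32 VELOCITY bins); the function name, the string event labels, and the exact velocity-to-bin mapping are illustrative assumptions rather than Magenta's implementation.

```python
def encode_performance(notes, step_ms=10, max_shift_steps=100, velocity_bins=32):
    """Encode notes as performance events.

    notes: list of (pitch, velocity 1..127, start_ms, end_ms).
    Returns a list of event strings.
    """
    # Each note contributes an on and an off boundary, quantized to step_ms.
    boundaries = []
    for pitch, velocity, start_ms, end_ms in notes:
        boundaries.append((round(start_ms / step_ms), 1, pitch, velocity))
        boundaries.append((round(end_ms / step_ms), 0, pitch, velocity))
    # Sort by time; at equal times NOTE_OFF (0) comes before NOTE_ON (1).
    boundaries.sort()

    events, current_step, current_bin = [], 0, None
    for step, is_on, pitch, velocity in boundaries:
        gap = step - current_step
        while gap > 0:  # long gaps are split into several shifts of at most 1 s
            shift = min(gap, max_shift_steps)
            events.append(f"TIME_SHIFT_{shift}")
            gap -= shift
        current_step = step
        if is_on:
            vel_bin = (velocity - 1) * velocity_bins // 127  # 1..127 -> 0..31
            if vel_bin != current_bin:  # emit VELOCITY only when it changes
                events.append(f"VELOCITY_{vel_bin}")
                current_bin = vel_bin
            events.append(f"NOTE_ON_{pitch}")
        else:
            events.append(f"NOTE_OFF_{pitch}")
    return events
```

For a single middle C at velocity 100 held for 1.5 s, this yields `VELOCITY_24`, `NOTE_ON_60`, `TIME_SHIFT_100`, `TIME_SHIFT_50`, `NOTE_OFF_60`.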

Sketch RNN

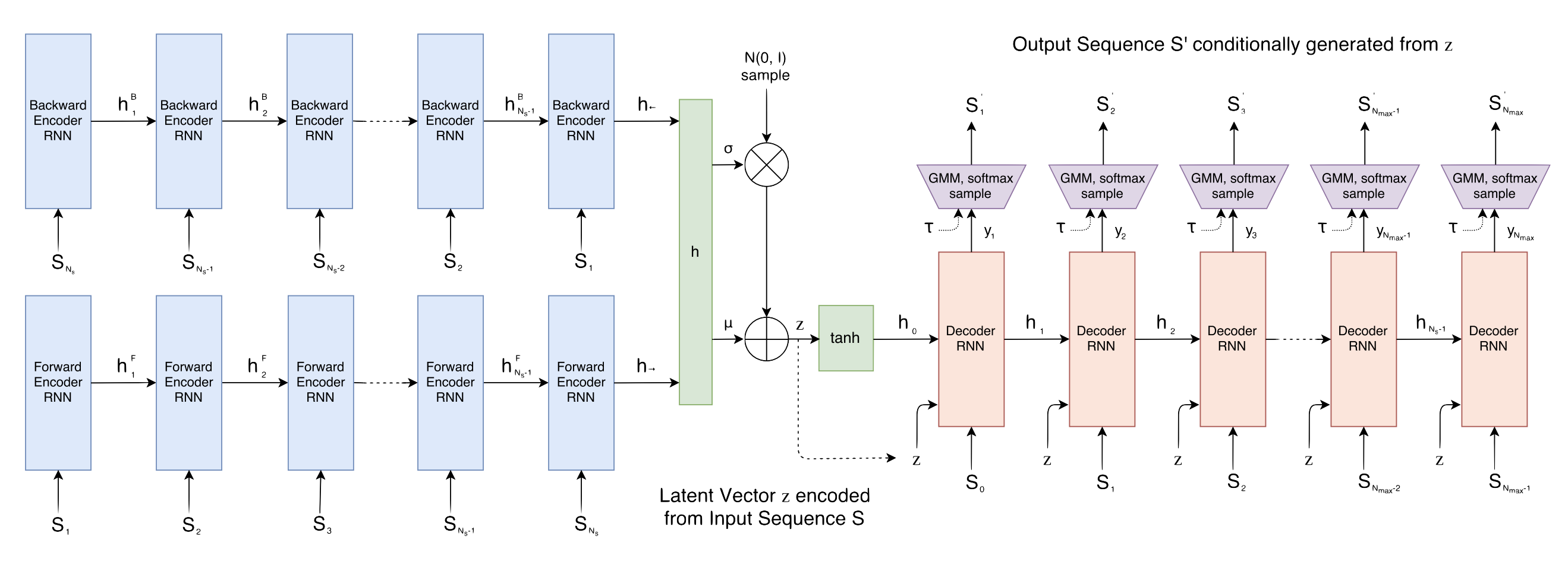

A recurrent neural network (RNN) able to construct stroke-based drawings of common objects. The model is trained on thousands of crude human-drawn images representing hundreds of classes.
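The paper "A Neural Representation of Sketch Drawings" represents a drawing as a sequence of pen movements in a "stroke-5" format: an offset (dx, dy) from the previous point plus a one-hot pen state (p1: pen stays down, p2: pen lifts after this point, p3: drawing has ended). The sketch below converts strokes of absolute (x, y) points into that format. `strokes_to_stroke5` is a hypothetical helper, and measuring the first offset from the origin (0, 0) is an assumption made for simplicity.

```python
def strokes_to_stroke5(strokes):
    """Convert strokes of absolute (x, y) points into stroke-5 rows.

    Each row is (dx, dy, p1, p2, p3); a final (0, 0, 0, 0, 1) row marks the end.
    """
    rows = []
    prev_x, prev_y = 0, 0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            lift = i == len(stroke) - 1  # the pen lifts after a stroke's last point
            rows.append((x - prev_x, y - prev_y, 0 if lift else 1, 1 if lift else 0, 0))
            prev_x, prev_y = x, y
    rows.append((0, 0, 0, 0, 1))  # end-of-drawing token
    return rows
```

Storing offsets rather than absolute positions keeps the values small and translation-invariant, which suits a recurrent model that predicts one pen movement at a time.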

Papers

- A Neural Representation of Sketch Drawings

- collabdraw: An environment for collaborative sketching with an artificial agent

Blog Posts

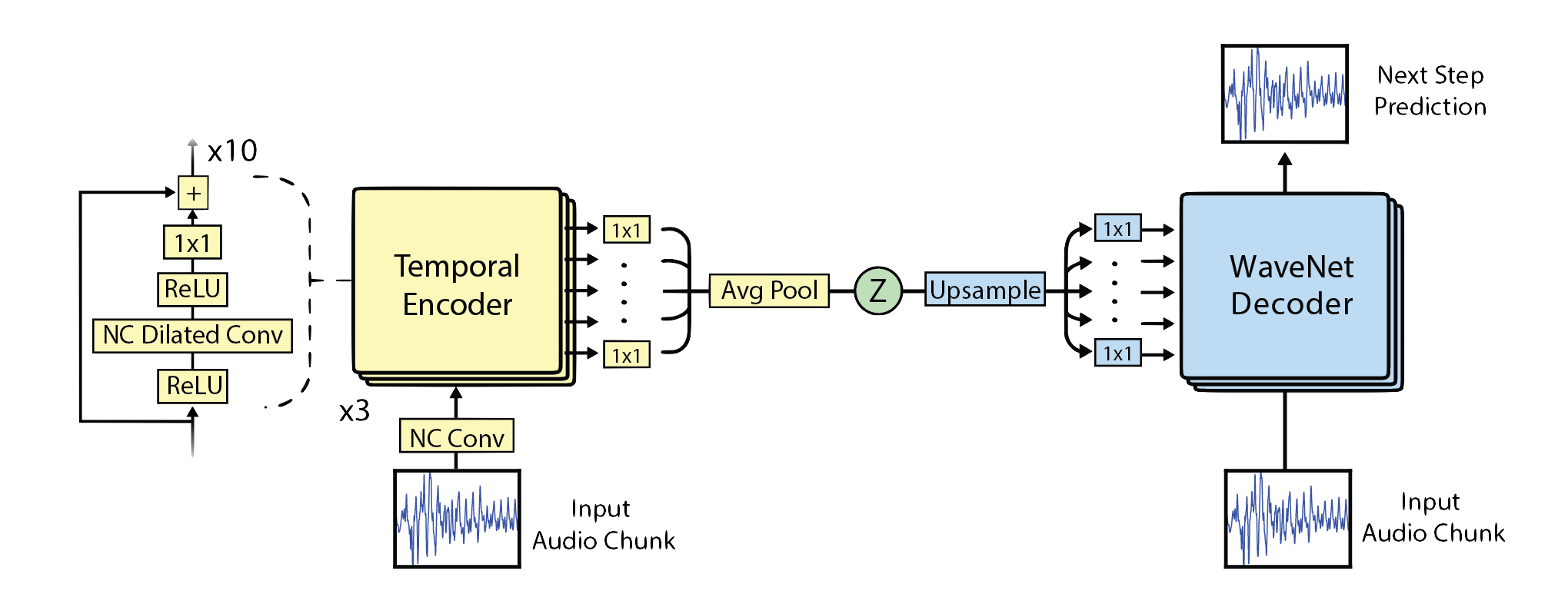

NSynth

A WaveNet-style autoencoder model that conditions an autoregressive decoder on temporal codes learned from the raw audio waveform.
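Because the temporal codes arrive at a much lower rate than the audio, they have to be stretched back to one conditioning vector per sample before the decoder can use them. The sketch below uses nearest-neighbour (repeat) upsampling and assumes, as we understand the NSynth setup, 16-dimensional codes emitted every 512 samples of 16 kHz audio; both numbers and the helper names are assumptions for illustration.

```python
def num_code_frames(num_samples, hop_size=512):
    """Number of temporal code frames for a clip (one frame per hop)."""
    return num_samples // hop_size


def upsample_codes(codes, hop_size=512):
    """Repeat each code frame hop_size times so that every audio sample
    has a conditioning vector (nearest-neighbour upsampling)."""
    return [frame for frame in codes for _ in range(hop_size)]
```

Under these assumptions, a 4-second clip at 16 kHz (64,000 samples) is summarized by 125 code frames of 16 values each, and upsampling restores one conditioning vector for each of the 64,000 samples the decoder generates.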

Papers

Blog Posts

- NSynth: Neural Audio Synthesis

- Making a Neural Synthesizer Instrument

- Generate your own sounds with NSynth

- Using NSynth to win the Outside Hacks Music Hackathon 2017

- Hands on, with NSynth Super